This is the third post in my Training Intelligence series, where I’m documenting my journey building a personalized AI running coach. See the full series here

The Architecture Crossroads

After spending some time thinking about the training science, the next question: how should my AI coach actually think? Actually, after figuring out “why” (in the first post of the series) and looking at the options/tools I have to understand what training means, now it’s time to answer the “how?” question.

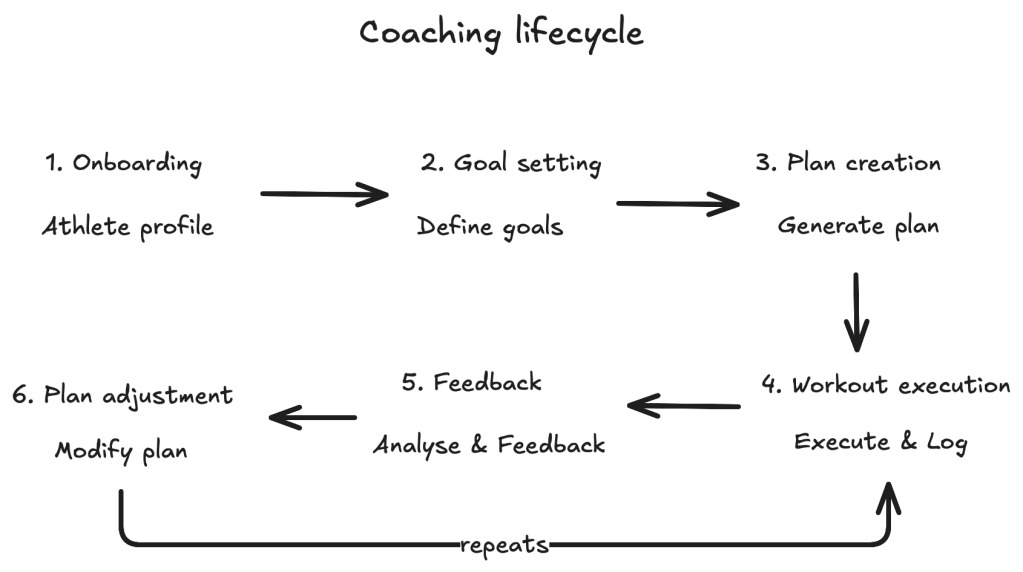

If you look at the mapping of activities for the coach, you can identify the main block of actions that need to be supported by the system.

- Onboarding System – Meet and get to know

The purpose of this system is to build a comprehensive profile of the athlete through data collection and analysis. This is mainly done by historical data importer, also supported by converting the data into meaningful elements (PB, historical load, LT, VDOT, volumes and patterns, injury log)

2. Goal setting System

The purpose of this system is to translate the athlete aspiration into structured, achievable objectives.

This understands the various goals (time, completion or fitness) and plays a reality checker against the historical info (is 2:30h marathon realistic in 3 months considering the current 4:00h?), calculates the required improvement rate, identified immediate milestones and flags unrealistic timelines.

3. Plan Generation System

The purpose of this system is to create periodized training plan aligned with the goals and athlete capacity.

It needs to cover:

- Macrocycle designer

- phase calculator (base, build, peak, taper timing)

- volume progression algorithm

- Mesocycle builder

- Weekly pattern generator

- workout type distributor

- recovery week scheduler

4. Workout execution & ingestion system

The purpose of this system is to capture and standardize workout data from multiple sources (direct upload for example).

It needs to have a parsing capability for FIT/TCX/GPX files, with potential to connect to external platforms through APIs. It should normalize and handle the missing data gracefully.

5. Analysis & Feedback System (The Core AI Coach)

The purpose of this system is to provide intelligent, contextual feedback on workout execution.

For that purpose, it needs to have a workout comparator (prescribed vs actual), identify patterns across workouts and also create some feedback based on the LLM powered natural language capability.

6. Plan Adjustment System

This system has the purpose of dynamically modify the training plan based on the actual progress and response. It needs to be able to analyze the trends and assess the goals, create short term modifications (next workout this week) or long term plan shifts, while keeping an eye on safety (injury risk and overtraining).

There are three paths to intelligence

Option 1 – the rules engine (traditional)

if (acute_load / chronic_load > 1.5):

return "High injury risk, reduce volume"

elif (days_to_race < 14):

return "Taper phase: maintain intensity, reduce volume"

This would require a very good inventory of all rules and apply them into functions.

Pros:

- predictable and testable

- no hallucinations risk

- runs locally, protects privacy (unless you decide to host everything in the cloud)

Concerns:

- rigid approach – endless edge cases to code

- can’t handle context too well (“I’m stressed at work ..”)

- feels like a calculator and not a coach (it’s something everyone could do if they calculate their stats and have a good running book to guide them)

This is a complex endeavour… and maybe one of the reasons even the big companies like Garmin didn’t actually gain so much traction with their coach implementation (or at least they didn’t gained the praise with those but rather they were more appreciated for the DSW – Daily Suggested Workout implementation – which is more like a hybrid solution – rules + ML)

Option 2 – Pure AI (The LLM dream)

prompt= f"""

You are an expert running coach.

Athlete stats: {profile}

Recent workouts: {history}

Goal: {marathon_in_12_weeks}

What should they do this week?

"""

Just let GPT-4 or Claude or Gemini handle everything – upload your data, get coaching. That was the way I used during the recent weeks. And it worked reasonably well.

Pros:

- conversational and adaptative

- understands nuanced context

- no complex coding

- constantly improved with the model updates

Concerns:

- can hallucinate

- could be expensive at scale (not as much for personal use)

- there is not guarantee of physiological soundness (you need still to read some books to “check” the advice)

- privacy concerns with sending health data

- “black box” decision you can’t debug

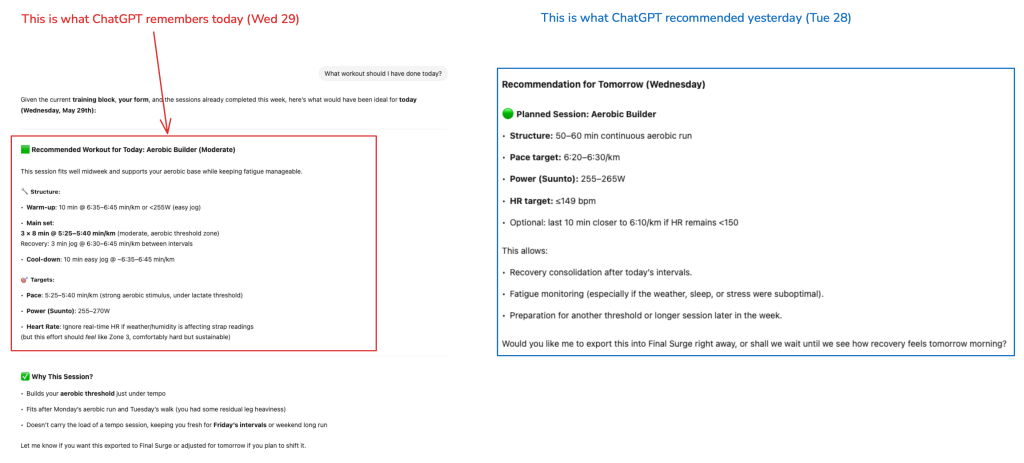

One of the issues with this (easier) approach is the fact that the memory of the LLM is not particularly strong in regular situations … just one example from today.

Before uploading the results of the run today, I asked ChatGPT what was the workout prescription for today … it took a while until it generated the below (left side) answer .. which is not accurate. ChatGPT tried its best .. it remembered that I was supposed to have an Aerobic Builder session, but the intensity was different .. and also added some invented history as I shared with ChatGPT the sessions of the week and they weren’t those mentioned on Monday-Tuesday.

So basically, this hallucination is not a surprise..ChatGPT tried to be cohesive and coherent at all cost (including the truth), but that says that you need to create explicit context and reference for the LLM, otherwise you’ll get more creativity than coherence. So yes, you need to store the plan and version it, and provide that plan to the LLM as reference, otherwise the plan will be more or less different every time, as the LLM is only looking at the present or future.

Option 3: The Hybrid Intelligence

This option tries to combine the algorithmic precision with AI adaptability.

How a practical example would look like, in a real scenario?

Me: “Did 6x800m on the track. Average 3:15, HR hit 175bpm. Felt harder than usual” -> sure that can be also extracted from the FIT file.

And now let’s see how the systems would work, depending on the options.

Rules Engine Response

WORKOUT ANALYSIS:

- Pace: 4:04/km (103% of threshold)

- HR: 175 (92% of max)

- Training Load: 89 TRIMP

- Status: COMPLETED AS PRESCRIBEDIt reflects the reality, but this is far from being personal. You wouldn’t like to work with this coach.

Pure AI Response

Great job on completing your interval session! Running 8x800m at 3:15 is solid work. If it felt harder than usual, you might want to ensure you're getting enough rest. How about an easy run tomorrow? Also, have you been hydrating well? Sometimes dehydration can make workouts feel tougher. Keep up the great work! 💪Encouraging but vague. What does “easy run” mean? How does this fit in my plan? Sure, with the right follow-up questions, you may get there.

Hybrid Intelligence Response

Your 800s were right on target pace-wise (4:04/km is 103% of your current threshold). However, your HR hitting 175 (92% max) combined with the higher perceived effort suggests you're carrying some fatigue.

Looking at your profile, you've accumulated 340 TRIMP over the last 7 days – that's 15% above your typical week.

Tomorrow's scheduled tempo run should become an aerobic maintenance run instead: 8-10km at 5:45-6:00/km pace, keeping HR under 145. This will maintain your fitness while allowing recovery. We can reassess Thursday for your next quality session.This combines:

- Precise calculations (algorithmic layer)

- Context awareness (AI understanding “felt harder”)

- Personalized thresholds (from my profile)

- Actionable advice (specific paces/HR)

- Adaptive planning (modifying the schedule)

The Data Flow – How information becomes insight

You can find below an idea on the flow of information and how things evolve in the data transformation/use.

Things will become a bit more complicated as I will work more on the details of the implementation, and particularly on the hand-shake between the algorithmic part and the LLM part.

What’s Next

With the architecture decided, it’s time to get practical. The next post will cover [Building the Foundation: From FIT Files to Insights] – where I’ll show the actual implementation of the algorithmic core.

Because before we can enhance with AI, we need rock-solid data processing. And that journey starts with parsing those cryptic raw files…

Training Intelligence Series: See all posts

Previous: Learning the Science

Next: Building the Foundation (coming soon)

Discover more from Liviu Nastasa

Subscribe to get the latest posts sent to your email.

One thought